Galera Cluster Limitations and Recovery

This instruction lists the most common limitations and problems when hosting a MariaDB Galera Cluster at the platform. Follow this guide to find possible issues and recovery solutions to the already occurred problems:

- Galera Cluster Limitations

- Stop/Start/Restart Specifics

- Node with Maximum Transactions

- Starting Cluster after Crash

- Single Node Failure

- Monitoring Galera Cluster

Galera Cluster Limitations

You can find a complete list of the Galera Cluster limitations on the official website.

Below, we’ll highlight the most relevant ones to the platform.

1. All tables must contain the Primary Key.

To search for tables without Primary Key, you need to run the following query:

| |

2. MyISAM tables.

An experimental parameter wsrep_replicate_myisam for supporting MyISAM tables has been added to the configuration file.

Stop/Start/Restart Specifics

The main condition for stopping a cluster is a sequential shutdown of all its nodes. The last container sets itself in bootstrap, which means that the cluster will start from this node.

The platform automates such a flow so that you don’t need to perform any additional actions. You can operate Galera Cluster just as any other regular environment and start/stop/restart via the dashboard. The special events will perform all the required actions (like the sequential withdrawal of nodes from the cluster) in the background.

When you restart just one node of the cluster, the actions are standard.

Node with Maximum Transactions

Before performing any actions, we highly recommend creating a backup for the /var/lib/mysql directory on each cluster node.

When performing the cluster recovery operations, you need to know the node with the highest sequence number of the last transaction (as the cluster should be started from this node). You can get the sequence number of the last transaction from the seqno value in the /var/lib/mysql/grastate.dat file of each node:

| |

Usually, you just need to select a node with the highest parameter value. If all or several nodes have the same highest value, choose any of them (preferably the master container of the layer).

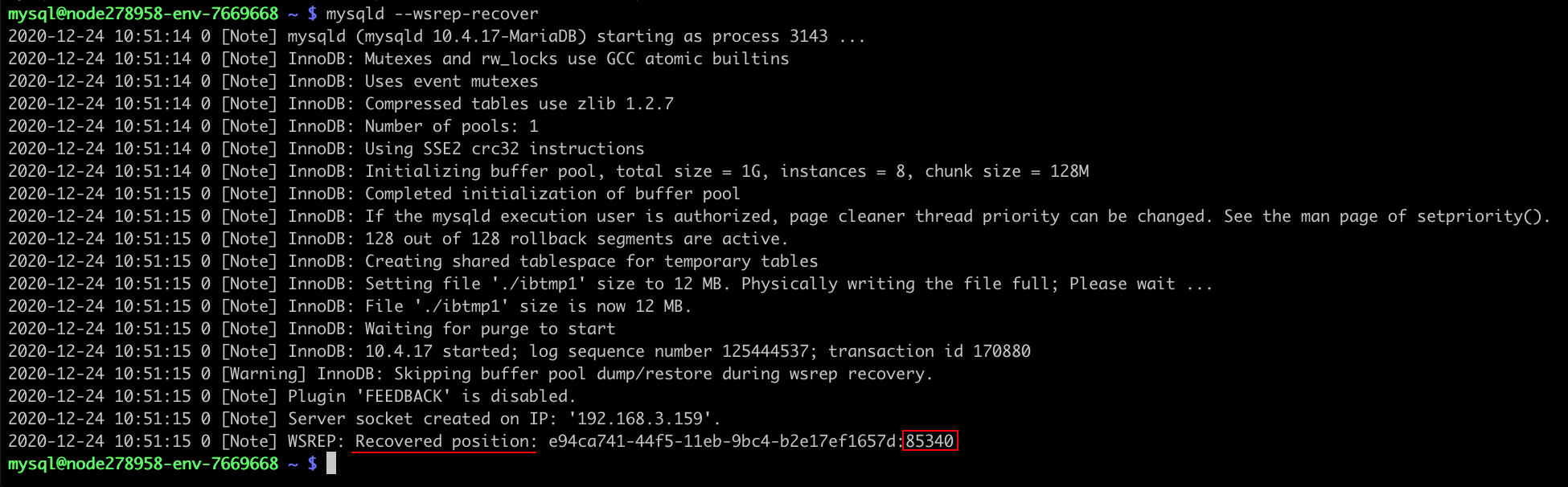

However, if at least one of the nodes has the -1 value, you cannot be sure that nodes are consistent (the parameter is reset to -1 when the service is restarted on a non-working cluster). In such a case, you need to recover data by starting mysqld with the –wsrep-recover parameter:

| |

Search output for the Recovered position data - check the value at the very end of the line after the colons (85340 in the example below):

| |

Compare the Recovered position on all nodes. The one with the highest value should be used for bootstrap. Once again, choose any node if multiple ones have the highest value. Next, set the safe_to_bootstrap variable to 1 in the grastate.dat file and bootstrap from this node.

Starting Cluster after Crash

1. The mysql process on nodes may hang after the cluster crash. It may be displayed as “running”, but you cannot perform normal operations like establishing a connection or stopping the process in a standard way (via the init script).

So, before starting a cluster, ensure that the mysql processes are not running on the nodes. The hang processes must be killed manually.

2. After killing the mysql processes, restart all your MySQL containers.

3. Check the value of the safe_to_bootstrap parameter in the /var/lib/mysql/grastate.dat file - it should be 0.

| |

4. On the node with maximum transactions, set safe_to_bootstrap to 1 and start the mysql process.

| |

5. Next, sequentially start mysql on the remaining nodes:

| |

If mysql is successfully started on the second node, you can proceed to the next ones.

However, in case of an error, check mysqld.log on this second node. Look for a message similar to the following one:

| |

If such a record exists, your second node has more transactions than the initially selected one (i.e. the first node where you set safe_to_bootstrap to 1). Please, return to the beginning of this section and start it anew, using the second node in the fourth step.

Single Node Failure

The most frequent cause of a node crash is the impossibility of processing a request due to some ignored limitations. You can check the /var/log/mysql/mysqld.log log for such errors.

In order to restore a node, you need to:

- ensure no mysql processes are running on the node

- set the safe_to_bootstrap parameter to 0 in the /var/lib/mysql/grastate.dat file

- restart the node via the init script

| |

Monitoring Galera Cluster

You can check the state and various parameters of the cluster by specifying them in the SHOW GLOBAL STATUS LIKE command on any node of the cluster. Depending on the provided value, you can see the different aspects of the cluster. For example:

| |

If your cluster includes ProxySQL nodes, the status may be checked by executing the following request on any of the ProxySQL nodes:

| |

All nodes must be in ONLINE status.